# Import all required libraries

import pandas as pd

import numpy as np

import seaborn as sns

import matplotlib.pyplot as plt

from sklearn.preprocessing import StandardScaler, MaxAbsScaler, MinMaxScaler

from sklearn.impute import SimpleImputer

import statsmodels.api as sm

import scipy.stats as stats

# Set the Seaborn theme and increase the font size of all plot elements

# (both go in one call: a later sns.set_theme() would reset font_scale to its default)

sns.set_theme(style = "whitegrid", font_scale = 1.25)

Data preprocessing

Lecture 6

University of Arizona

INFO 511

Setup

Data preprocessing


Data preprocessing refers to the manipulation, filtration, or augmentation of data before it is analyzed. It is a crucial step in the data science process.

It’s essentially data cleaning.

Dataset

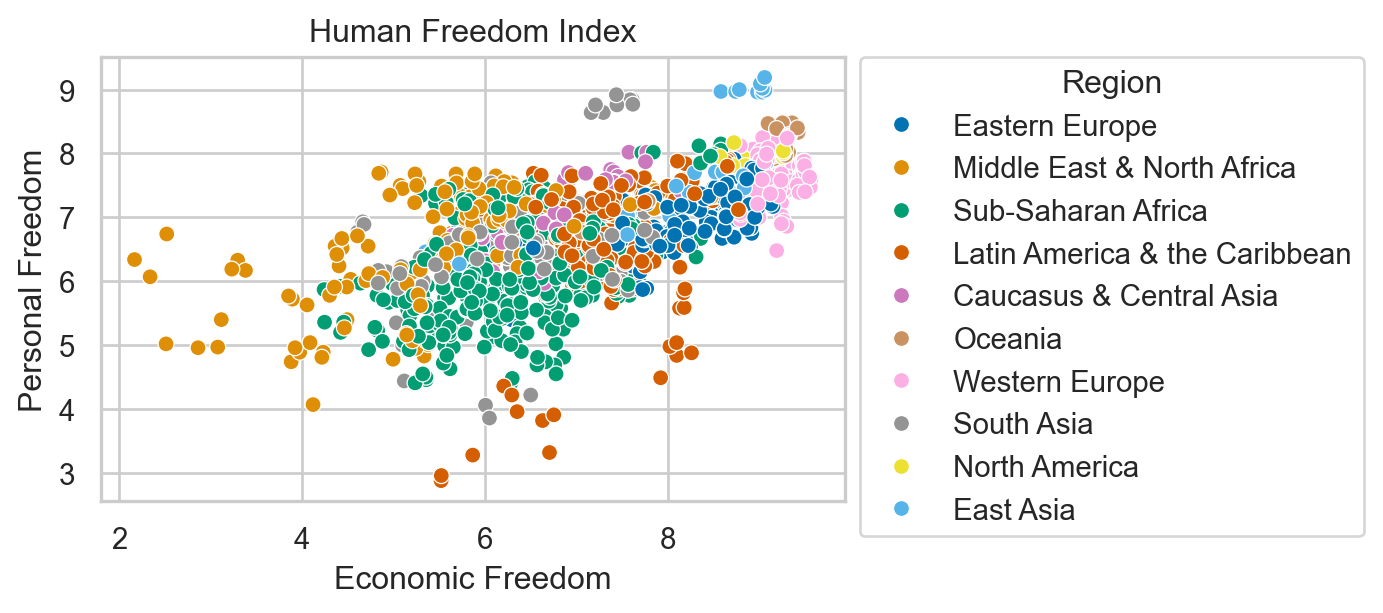

Human Freedom Index

The Human Freedom Index is a report that attempts to summarize the idea of “freedom” through variables for many countries around the globe.

Question

What trends are there within human freedom indices in different regions?

Dataset: Human Freedom Index

|  | year | ISO_code | countries | region | pf_rol_procedural | pf_rol_civil | pf_rol_criminal | pf_rol | pf_ss_homicide | pf_ss_disappearances_disap | ... | ef_regulation_business_bribes | ef_regulation_business_licensing | ef_regulation_business_compliance | ef_regulation_business | ef_regulation | ef_score | ef_rank | hf_score | hf_rank | hf_quartile |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 2016 | ALB | Albania | Eastern Europe | 6.661503 | 4.547244 | 4.666508 | 5.291752 | 8.920429 | 10.0 | ... | 4.050196 | 7.324582 | 7.074366 | 6.705863 | 6.906901 | 7.54 | 34.0 | 7.568140 | 48.0 | 2.0 |
| 1 | 2016 | DZA | Algeria | Middle East & North Africa | NaN | NaN | NaN | 3.819566 | 9.456254 | 10.0 | ... | 3.765515 | 8.523503 | 7.029528 | 5.676956 | 5.268992 | 4.99 | 159.0 | 5.135886 | 155.0 | 4.0 |
| 2 | 2016 | AGO | Angola | Sub-Saharan Africa | NaN | NaN | NaN | 3.451814 | 8.060260 | 5.0 | ... | 1.945540 | 8.096776 | 6.782923 | 4.930271 | 5.518500 | 5.17 | 155.0 | 5.640662 | 142.0 | 4.0 |
| 3 | 2016 | ARG | Argentina | Latin America & the Caribbean | 7.098483 | 5.791960 | 4.343930 | 5.744791 | 7.622974 | 10.0 | ... | 3.260044 | 5.253411 | 6.508295 | 5.535831 | 5.369019 | 4.84 | 160.0 | 6.469848 | 107.0 | 3.0 |
| 4 | 2016 | ARM | Armenia | Caucasus & Central Asia | NaN | NaN | NaN | 5.003205 | 8.808750 | 10.0 | ... | 4.575152 | 9.319612 | 6.491481 | 6.797530 | 7.378069 | 7.57 | 29.0 | 7.241402 | 57.0 | 2.0 |

5 rows × 123 columns

Understanding the data

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 1458 entries, 0 to 1457

Data columns (total 123 columns):

# Column Dtype

--- ------ -----

0 year int64

1 ISO_code object

2 countries object

3 region object

4 pf_rol_procedural float64

5 pf_rol_civil float64

6 pf_rol_criminal float64

7 pf_rol float64

8 pf_ss_homicide float64

9 pf_ss_disappearances_disap float64

10 pf_ss_disappearances_violent float64

11 pf_ss_disappearances_organized float64

12 pf_ss_disappearances_fatalities float64

13 pf_ss_disappearances_injuries float64

14 pf_ss_disappearances float64

15 pf_ss_women_fgm float64

16 pf_ss_women_missing float64

17 pf_ss_women_inheritance_widows float64

18 pf_ss_women_inheritance_daughters float64

19 pf_ss_women_inheritance float64

20 pf_ss_women float64

21 pf_ss float64

22 pf_movement_domestic float64

23 pf_movement_foreign float64

24 pf_movement_women float64

25 pf_movement float64

26 pf_religion_estop_establish float64

27 pf_religion_estop_operate float64

28 pf_religion_estop float64

29 pf_religion_harassment float64

30 pf_religion_restrictions float64

31 pf_religion float64

32 pf_association_association float64

33 pf_association_assembly float64

34 pf_association_political_establish float64

35 pf_association_political_operate float64

36 pf_association_political float64

37 pf_association_prof_establish float64

38 pf_association_prof_operate float64

39 pf_association_prof float64

40 pf_association_sport_establish float64

41 pf_association_sport_operate float64

42 pf_association_sport float64

43 pf_association float64

44 pf_expression_killed float64

45 pf_expression_jailed float64

46 pf_expression_influence float64

47 pf_expression_control float64

48 pf_expression_cable float64

49 pf_expression_newspapers float64

50 pf_expression_internet float64

51 pf_expression float64

52 pf_identity_legal float64

53 pf_identity_parental_marriage float64

54 pf_identity_parental_divorce float64

55 pf_identity_parental float64

56 pf_identity_sex_male float64

57 pf_identity_sex_female float64

58 pf_identity_sex float64

59 pf_identity_divorce float64

60 pf_identity float64

61 pf_score float64

62 pf_rank float64

63 ef_government_consumption float64

64 ef_government_transfers float64

65 ef_government_enterprises float64

66 ef_government_tax_income float64

67 ef_government_tax_payroll float64

68 ef_government_tax float64

69 ef_government float64

70 ef_legal_judicial float64

71 ef_legal_courts float64

72 ef_legal_protection float64

73 ef_legal_military float64

74 ef_legal_integrity float64

75 ef_legal_enforcement float64

76 ef_legal_restrictions float64

77 ef_legal_police float64

78 ef_legal_crime float64

79 ef_legal_gender float64

80 ef_legal float64

81 ef_money_growth float64

82 ef_money_sd float64

83 ef_money_inflation float64

84 ef_money_currency float64

85 ef_money float64

86 ef_trade_tariffs_revenue float64

87 ef_trade_tariffs_mean float64

88 ef_trade_tariffs_sd float64

89 ef_trade_tariffs float64

90 ef_trade_regulatory_nontariff float64

91 ef_trade_regulatory_compliance float64

92 ef_trade_regulatory float64

93 ef_trade_black float64

94 ef_trade_movement_foreign float64

95 ef_trade_movement_capital float64

96 ef_trade_movement_visit float64

97 ef_trade_movement float64

98 ef_trade float64

99 ef_regulation_credit_ownership float64

100 ef_regulation_credit_private float64

101 ef_regulation_credit_interest float64

102 ef_regulation_credit float64

103 ef_regulation_labor_minwage float64

104 ef_regulation_labor_firing float64

105 ef_regulation_labor_bargain float64

106 ef_regulation_labor_hours float64

107 ef_regulation_labor_dismissal float64

108 ef_regulation_labor_conscription float64

109 ef_regulation_labor float64

110 ef_regulation_business_adm float64

111 ef_regulation_business_bureaucracy float64

112 ef_regulation_business_start float64

113 ef_regulation_business_bribes float64

114 ef_regulation_business_licensing float64

115 ef_regulation_business_compliance float64

116 ef_regulation_business float64

117 ef_regulation float64

118 ef_score float64

119 ef_rank float64

120 hf_score float64

121 hf_rank float64

122 hf_quartile float64

dtypes: float64(119), int64(1), object(3)

memory usage: 1.4+ MB

|  | year | pf_rol_procedural | pf_rol_civil | pf_rol_criminal | pf_rol | pf_ss_homicide | pf_ss_disappearances_disap | pf_ss_disappearances_violent | pf_ss_disappearances_organized | pf_ss_disappearances_fatalities | ... | ef_regulation_business_bribes | ef_regulation_business_licensing | ef_regulation_business_compliance | ef_regulation_business | ef_regulation | ef_score | ef_rank | hf_score | hf_rank | hf_quartile |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| count | 1458.000000 | 880.000000 | 880.000000 | 880.000000 | 1378.000000 | 1378.000000 | 1369.000000 | 1378.000000 | 1279.000000 | 1378.000000 | ... | 1283.000000 | 1357.000000 | 1368.000000 | 1374.000000 | 1378.000000 | 1378.000000 | 1378.000000 | 1378.000000 | 1378.000000 | 1378.000000 |
| mean | 2012.000000 | 5.589355 | 5.474770 | 5.044070 | 5.309641 | 7.412980 | 8.341855 | 9.519458 | 6.772869 | 9.584972 | ... | 4.886192 | 7.698494 | 6.981858 | 6.317668 | 7.019782 | 6.785610 | 76.973149 | 6.993444 | 77.007983 | 2.490566 |
| std | 2.582875 | 2.080957 | 1.428494 | 1.724886 | 1.529310 | 2.832947 | 3.225902 | 1.744673 | 2.768983 | 1.559826 | ... | 1.889168 | 1.728507 | 1.979200 | 1.230988 | 1.027625 | 0.883601 | 44.540142 | 1.025811 | 44.506549 | 1.119698 |
| min | 2008.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | ... | 0.000000 | 0.000000 | 0.000000 | 2.009841 | 2.483540 | 2.880000 | 1.000000 | 3.765827 | 1.000000 | 1.000000 |
| 25% | 2010.000000 | 4.133333 | 4.549550 | 3.789724 | 4.131746 | 6.386978 | 10.000000 | 10.000000 | 5.000000 | 9.942607 | ... | 3.433786 | 6.874687 | 6.368178 | 5.591851 | 6.429498 | 6.250000 | 38.000000 | 6.336685 | 39.000000 | 1.000000 |
| 50% | 2012.000000 | 5.300000 | 5.300000 | 4.575189 | 4.910797 | 8.638278 | 10.000000 | 10.000000 | 7.500000 | 10.000000 | ... | 4.418371 | 8.074161 | 7.466692 | 6.265234 | 7.082075 | 6.900000 | 77.000000 | 6.923840 | 76.000000 | 2.000000 |
| 75% | 2014.000000 | 7.389499 | 6.410975 | 6.400000 | 6.513178 | 9.454402 | 10.000000 | 10.000000 | 10.000000 | 10.000000 | ... | 6.227978 | 8.991882 | 8.209310 | 7.139718 | 7.720955 | 7.410000 | 115.000000 | 7.894660 | 115.000000 | 3.000000 |
| max | 2016.000000 | 9.700000 | 8.773533 | 8.719848 | 8.723094 | 9.926568 | 10.000000 | 10.000000 | 10.000000 | 10.000000 | ... | 9.623811 | 9.999638 | 9.865488 | 9.272600 | 9.439828 | 9.190000 | 162.000000 | 9.126313 | 162.000000 | 4.000000 |

8 rows × 120 columns

Identifying missing values

year 0

ISO_code 0

countries 0

region 0

pf_rol_procedural 578

...

ef_score 80

ef_rank 80

hf_score 80

hf_rank 80

hf_quartile 80

Length: 123, dtype: int64

A lot of missing values 🙃
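Per-column counts like the ones above are typically produced with `isna().sum()`. A minimal sketch on a made-up three-row frame (the `toy` DataFrame and its values are assumptions for illustration, not rows from the HFI data):

```python
import numpy as np
import pandas as pd

# Toy stand-in for hfi; all values are invented for illustration
toy = pd.DataFrame({
    "countries": ["A", "B", "C"],
    "pf_rol_procedural": [6.7, np.nan, np.nan],
    "hf_score": [7.6, 5.1, np.nan],
})

# Number of missing values per column
missing_counts = toy.isna().sum()

# Share of missing values per column (mean of a boolean mask)
missing_share = toy.isna().mean()

print(missing_counts)
print(missing_share.round(2))
```

The share is often easier to read than the raw count when deciding whether a column is worth keeping.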

Data cleaning

Handling missing data

Options

Do nothing…

Remove them

Impute
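As a rough illustration of the "remove them" option, the sketch below drops rows with pandas `dropna`. The `toy` frame is made up; only the column names are borrowed from the dataset.

```python
import numpy as np
import pandas as pd

# Invented data: two rows lack hf_score, one row lacks ef_score
toy = pd.DataFrame({
    "countries": ["A", "B", "C", "D"],
    "hf_score": [7.6, np.nan, 5.1, np.nan],
    "ef_score": [7.5, 5.0, np.nan, 6.1],
})

# Drop rows only where the column of interest is missing
dropped_subset = toy.dropna(subset=["hf_score"])

# Drop rows with a missing value in any column (more aggressive)
dropped_any = toy.dropna()

print(len(toy), len(dropped_subset), len(dropped_any))
```

Restricting `subset` to the columns actually analyzed usually discards far fewer rows than dropping on every column.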

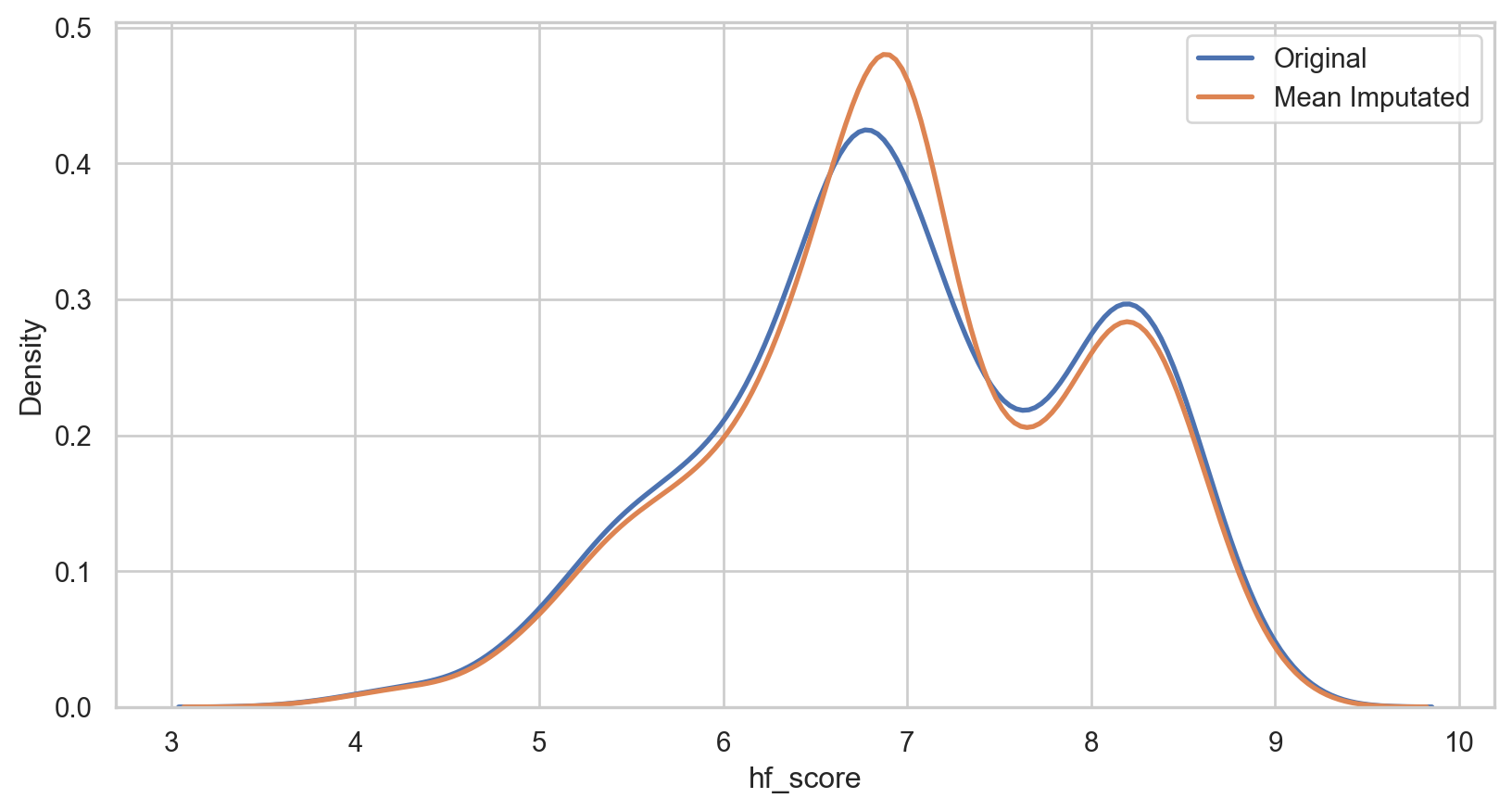

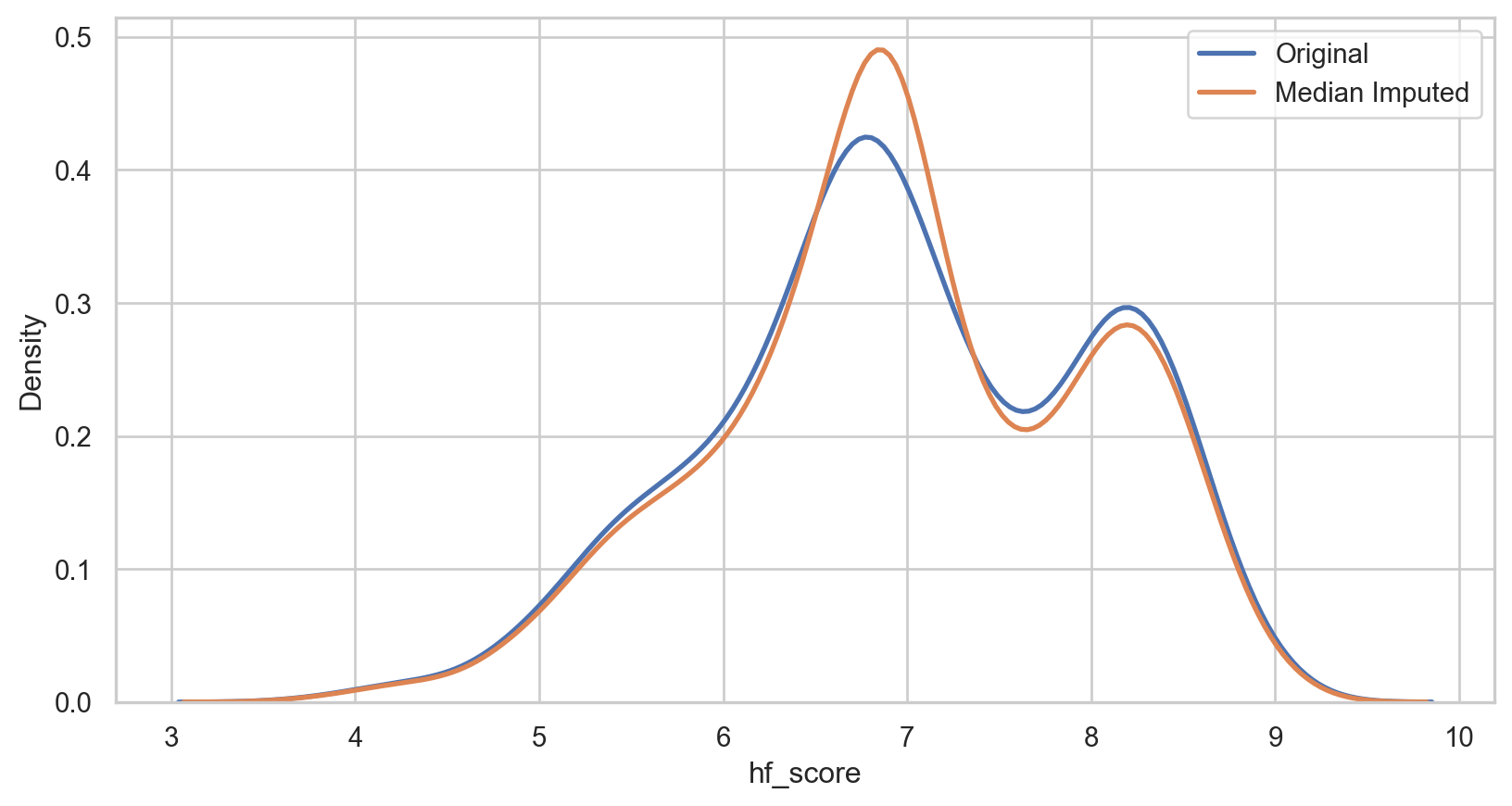

We will use the hf_score from hfi: 80 missing values

Imputation

In statistics, imputation is the process of replacing missing data with substituted values.

Mean imputation

Median imputation

Mode imputation
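Before the scikit-learn versions, here is a small pandas-only sketch of the three strategies on a made-up series (the values are assumptions chosen so the arithmetic is easy to check by hand):

```python
import numpy as np
import pandas as pd

# Invented scores with two missing entries
scores = pd.Series([6.0, 7.0, 7.0, 9.0, np.nan, np.nan], name="hf_score")

# Each strategy fills every gap with one constant computed from the observed values
mean_filled = scores.fillna(scores.mean())          # mean of 6, 7, 7, 9 is 7.25
median_filled = scores.fillna(scores.median())      # median is 7.0
mode_filled = scores.fillna(scores.mode().iloc[0])  # most frequent value is 7.0

# Filling with a constant removes the gaps but shrinks the spread
print(scores.std(), mean_filled.std())
```

The drop in standard deviation after filling is one concrete face of the "not very accurate" and "doesn't account for uncertainty" drawbacks.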

Mean imputation

How it Works: Replace missing values with the arithmetic mean of the non-missing values in the same variable.

Pros:

- Easy and fast.

- Works well with small numerical datasets

Cons:

- It only works on the column level.

- Will give poor results on encoded categorical features.

- Not very accurate.

- Doesn’t account for the uncertainty in the imputations.

# Work on a copy so the original DataFrame stays untouched

hfi_copy = hfi.copy()

# Fill missing hf_score values with the column mean

mean_imputer = SimpleImputer(strategy = 'mean')

hfi_copy['mean_hf_score'] = mean_imputer.fit_transform(hfi_copy[['hf_score']])

# Overlay the original and the imputed densities

mean_plot = sns.kdeplot(data = hfi_copy, x = 'hf_score', linewidth = 2, label = "Original")

mean_plot = sns.kdeplot(data = hfi_copy, x = 'mean_hf_score', linewidth = 2, label = "Mean Imputed")

plt.legend()

plt.show()

Median imputation

How it Works: Replace missing values with the median of the non-missing values in the same variable.

Pros (same as mean):

- Easy and fast.

- Works well with small numerical datasets

Cons (same as mean):

- It only works on the column level.

- Will give poor results on encoded categorical features.

- Not very accurate.

- Doesn’t account for the uncertainty in the imputations.

hfi_copy = hfi.copy()

median_imputer = SimpleImputer(strategy = 'median')

hfi_copy['median_hf_score'] = median_imputer.fit_transform(hfi_copy[['hf_score']])

median_plot = sns.kdeplot(data = hfi_copy, x = 'hf_score', linewidth = 2, label = "Original")

median_plot = sns.kdeplot(data = hfi_copy, x = 'median_hf_score', linewidth = 2, label = "Median Imputed")

plt.legend()

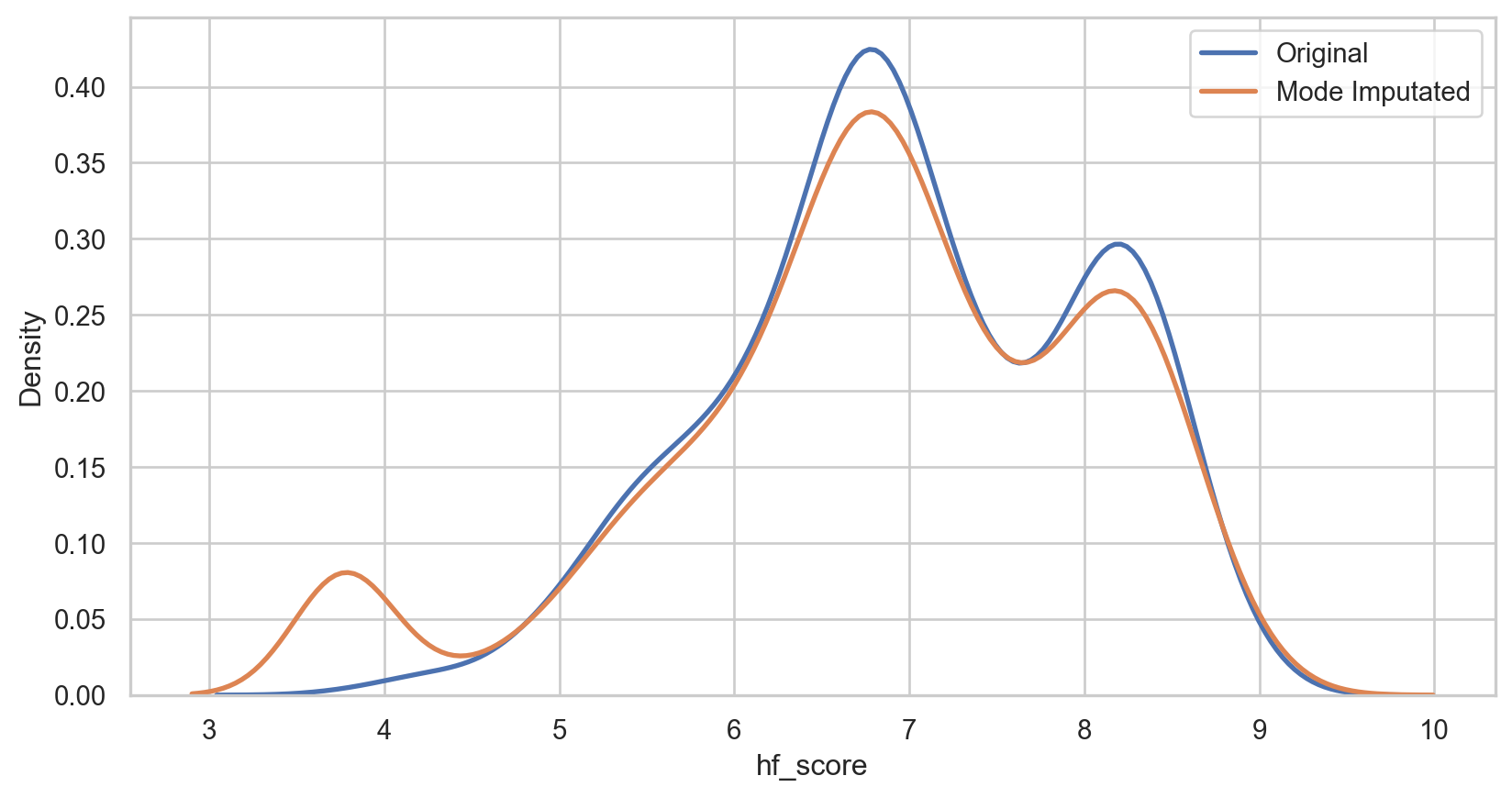

plt.show()Mode imputation

How it Works: Replace missing values with the mode of the non-missing values in the same variable.

Pros:

- Easy and fast.

- Works well with categorical features.

Cons:

- Doesn’t factor in the correlations between features.

- Can introduce bias in the data.

# Keep working on hfi_copy so the earlier imputed columns are retained

# Fill missing hf_score values with the most frequent value

mode_imputer = SimpleImputer(strategy = 'most_frequent')

hfi_copy['mode_hf_score'] = mode_imputer.fit_transform(hfi_copy[['hf_score']])

mode_plot = sns.kdeplot(data = hfi_copy, x = 'hf_score', linewidth = 2, label = "Original")

mode_plot = sns.kdeplot(data = hfi_copy, x = 'mode_hf_score', linewidth = 2, label = "Mode Imputed")

plt.legend()

plt.show()

Data type conversion

Logical operators

| operator | definition |
|---|---|
| < | is less than? |
| <= | is less than or equal to? |
| > | is greater than? |
| >= | is greater than or equal to? |
| == | is exactly equal to? |
| != | is not equal to? |

Logical operators cont.

| operator | definition |
|---|---|
| x and y | is x AND y? |
| x or y | is x OR y? |
| x is None | is x None? |
| x is not None | is x not None? |
| x in y | is x in y? |
| x not in y | is x not in y? |
| not x | is not x? (only makes sense if x is True or False) |
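The operators in the tables apply to single Python values. On pandas columns, comparisons work elementwise, but `and`, `or`, and `not` are replaced by `&`, `|`, and `~`. A short sketch on invented data (the `toy` frame is an assumption for illustration):

```python
import pandas as pd

toy = pd.DataFrame({
    "region": ["Eastern Europe", "Sub-Saharan Africa", "Eastern Europe"],
    "hf_score": [7.57, 5.64, 6.10],
})

# Plain Python values: and / or / not behave as in the tables
x, y = 7.57, 5.64
print(x > 7 and y > 7)

# pandas columns: each comparison returns a boolean Series
high = toy["hf_score"] >= 7
eastern = toy["region"] == "Eastern Europe"

# Combine boolean Series elementwise with &, | and ~
both = toy[high & eastern]
either = toy[high | eastern]
neither = toy[~(high | eastern)]

# Row-wise membership test: the pandas counterpart of `x in y`
in_list = toy["region"].isin(["Sub-Saharan Africa"])
```

When comparisons are written inline, each one needs its own parentheses, for example `(toy["hf_score"] >= 7) & (toy["region"] == "Eastern Europe")`, because `&` binds more tightly than `>=`.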

Looking at our data

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 1458 entries, 0 to 1457

Data columns (total 126 columns):

# Column Dtype

--- ------ -----

0 year int64

1 ISO_code object

2 countries object

3 region object

4 pf_rol_procedural float64

5 pf_rol_civil float64

6 pf_rol_criminal float64

7 pf_rol float64

8 pf_ss_homicide float64

9 pf_ss_disappearances_disap float64

10 pf_ss_disappearances_violent float64

11 pf_ss_disappearances_organized float64

12 pf_ss_disappearances_fatalities float64

13 pf_ss_disappearances_injuries float64

14 pf_ss_disappearances float64

15 pf_ss_women_fgm float64

16 pf_ss_women_missing float64

17 pf_ss_women_inheritance_widows float64

18 pf_ss_women_inheritance_daughters float64

19 pf_ss_women_inheritance float64

20 pf_ss_women float64

21 pf_ss float64

22 pf_movement_domestic float64

23 pf_movement_foreign float64

24 pf_movement_women float64

25 pf_movement float64

26 pf_religion_estop_establish float64

27 pf_religion_estop_operate float64

28 pf_religion_estop float64

29 pf_religion_harassment float64

30 pf_religion_restrictions float64

31 pf_religion float64

32 pf_association_association float64

33 pf_association_assembly float64

34 pf_association_political_establish float64

35 pf_association_political_operate float64

36 pf_association_political float64

37 pf_association_prof_establish float64

38 pf_association_prof_operate float64

39 pf_association_prof float64

40 pf_association_sport_establish float64

41 pf_association_sport_operate float64

42 pf_association_sport float64

43 pf_association float64

44 pf_expression_killed float64

45 pf_expression_jailed float64

46 pf_expression_influence float64

47 pf_expression_control float64

48 pf_expression_cable float64

49 pf_expression_newspapers float64

50 pf_expression_internet float64

51 pf_expression float64

52 pf_identity_legal float64

53 pf_identity_parental_marriage float64

54 pf_identity_parental_divorce float64

55 pf_identity_parental float64

56 pf_identity_sex_male float64

57 pf_identity_sex_female float64

58 pf_identity_sex float64

59 pf_identity_divorce float64

60 pf_identity float64

61 pf_score float64

62 pf_rank float64

63 ef_government_consumption float64

64 ef_government_transfers float64

65 ef_government_enterprises float64

66 ef_government_tax_income float64

67 ef_government_tax_payroll float64

68 ef_government_tax float64

69 ef_government float64

70 ef_legal_judicial float64

71 ef_legal_courts float64

72 ef_legal_protection float64

73 ef_legal_military float64

74 ef_legal_integrity float64

75 ef_legal_enforcement float64

76 ef_legal_restrictions float64

77 ef_legal_police float64

78 ef_legal_crime float64

79 ef_legal_gender float64

80 ef_legal float64

81 ef_money_growth float64

82 ef_money_sd float64

83 ef_money_inflation float64

84 ef_money_currency float64

85 ef_money float64

86 ef_trade_tariffs_revenue float64

87 ef_trade_tariffs_mean float64

88 ef_trade_tariffs_sd float64

89 ef_trade_tariffs float64

90 ef_trade_regulatory_nontariff float64

91 ef_trade_regulatory_compliance float64

92 ef_trade_regulatory float64

93 ef_trade_black float64

94 ef_trade_movement_foreign float64

95 ef_trade_movement_capital float64

96 ef_trade_movement_visit float64

97 ef_trade_movement float64

98 ef_trade float64

99 ef_regulation_credit_ownership float64

100 ef_regulation_credit_private float64

101 ef_regulation_credit_interest float64

102 ef_regulation_credit float64

103 ef_regulation_labor_minwage float64

104 ef_regulation_labor_firing float64

105 ef_regulation_labor_bargain float64

106 ef_regulation_labor_hours float64

107 ef_regulation_labor_dismissal float64

108 ef_regulation_labor_conscription float64

109 ef_regulation_labor float64

110 ef_regulation_business_adm float64

111 ef_regulation_business_bureaucracy float64

112 ef_regulation_business_start float64

113 ef_regulation_business_bribes float64

114 ef_regulation_business_licensing float64

115 ef_regulation_business_compliance float64

116 ef_regulation_business float64

117 ef_regulation float64

118 ef_score float64

119 ef_rank float64

120 hf_score float64

121 hf_rank float64

122 hf_quartile float64

123 mean_hf_score float64

124 median_hf_score float64

125 mode_hf_score float64

dtypes: float64(122), int64(1), object(3)

memory usage: 1.4+ MB

Converting year to Datetime
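The conversion code itself is not shown here. One possible way to do it, assuming `year` holds integer years, is `pd.to_datetime` with an explicit format, sketched on a made-up frame:

```python
import pandas as pd

# Invented stand-in for the year column
toy = pd.DataFrame({"year": [2016, 2015, 2008]})

# Cast to string and give an explicit format so 2016 is read as the year 2016;
# without a format, integers are interpreted as offsets from the epoch
toy["year"] = pd.to_datetime(toy["year"].astype(str), format="%Y")

print(toy["year"].dtype)
print(toy["year"].dt.year.tolist())
```

Each value becomes January 1 of that year, and the `.dt` accessor recovers the year when needed.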

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 1458 entries, 0 to 1457

Data columns (total 126 columns):

# Column Dtype

--- ------ -----

0 year datetime64[ns]

1 ISO_code object

2 countries object

3 region object

4 pf_rol_procedural float64

5 pf_rol_civil float64

6 pf_rol_criminal float64

7 pf_rol float64

8 pf_ss_homicide float64

9 pf_ss_disappearances_disap float64

10 pf_ss_disappearances_violent float64

11 pf_ss_disappearances_organized float64

12 pf_ss_disappearances_fatalities float64

13 pf_ss_disappearances_injuries float64

14 pf_ss_disappearances float64

15 pf_ss_women_fgm float64

16 pf_ss_women_missing float64

17 pf_ss_women_inheritance_widows float64

18 pf_ss_women_inheritance_daughters float64

19 pf_ss_women_inheritance float64

20 pf_ss_women float64

21 pf_ss float64

22 pf_movement_domestic float64

23 pf_movement_foreign float64

24 pf_movement_women float64

25 pf_movement float64

26 pf_religion_estop_establish float64

27 pf_religion_estop_operate float64

28 pf_religion_estop float64

29 pf_religion_harassment float64

30 pf_religion_restrictions float64

31 pf_religion float64

32 pf_association_association float64

33 pf_association_assembly float64

34 pf_association_political_establish float64

35 pf_association_political_operate float64

36 pf_association_political float64

37 pf_association_prof_establish float64

38 pf_association_prof_operate float64

39 pf_association_prof float64

40 pf_association_sport_establish float64

41 pf_association_sport_operate float64

42 pf_association_sport float64

43 pf_association float64

44 pf_expression_killed float64

45 pf_expression_jailed float64

46 pf_expression_influence float64

47 pf_expression_control float64

48 pf_expression_cable float64

49 pf_expression_newspapers float64

50 pf_expression_internet float64

51 pf_expression float64

52 pf_identity_legal float64

53 pf_identity_parental_marriage float64

54 pf_identity_parental_divorce float64

55 pf_identity_parental float64

56 pf_identity_sex_male float64

57 pf_identity_sex_female float64

58 pf_identity_sex float64

59 pf_identity_divorce float64

60 pf_identity float64

61 pf_score float64

62 pf_rank float64

63 ef_government_consumption float64

64 ef_government_transfers float64

65 ef_government_enterprises float64

66 ef_government_tax_income float64

67 ef_government_tax_payroll float64

68 ef_government_tax float64

69 ef_government float64

70 ef_legal_judicial float64

71 ef_legal_courts float64

72 ef_legal_protection float64

73 ef_legal_military float64

74 ef_legal_integrity float64

75 ef_legal_enforcement float64

76 ef_legal_restrictions float64

77 ef_legal_police float64

78 ef_legal_crime float64

79 ef_legal_gender float64

80 ef_legal float64

81 ef_money_growth float64

82 ef_money_sd float64

83 ef_money_inflation float64

84 ef_money_currency float64

85 ef_money float64

86 ef_trade_tariffs_revenue float64

87 ef_trade_tariffs_mean float64

88 ef_trade_tariffs_sd float64

89 ef_trade_tariffs float64

90 ef_trade_regulatory_nontariff float64

91 ef_trade_regulatory_compliance float64

92 ef_trade_regulatory float64

93 ef_trade_black float64

94 ef_trade_movement_foreign float64

95 ef_trade_movement_capital float64

96 ef_trade_movement_visit float64

97 ef_trade_movement float64

98 ef_trade float64

99 ef_regulation_credit_ownership float64

100 ef_regulation_credit_private float64

101 ef_regulation_credit_interest float64

102 ef_regulation_credit float64

103 ef_regulation_labor_minwage float64

104 ef_regulation_labor_firing float64

105 ef_regulation_labor_bargain float64

106 ef_regulation_labor_hours float64

107 ef_regulation_labor_dismissal float64

108 ef_regulation_labor_conscription float64

109 ef_regulation_labor float64

110 ef_regulation_business_adm float64

111 ef_regulation_business_bureaucracy float64

112 ef_regulation_business_start float64

113 ef_regulation_business_bribes float64

114 ef_regulation_business_licensing float64

115 ef_regulation_business_compliance float64

116 ef_regulation_business float64

117 ef_regulation float64

118 ef_score float64

119 ef_rank float64

120 hf_score float64

121 hf_rank float64

122 hf_quartile float64

123 mean_hf_score float64

124 median_hf_score float64

125 mode_hf_score float64

dtypes: datetime64[ns](1), float64(122), object(3)

memory usage: 1.4+ MB

None

Converting ISO_code, countries, and region to Categorical

hfi_copy['ISO_code'] = hfi_copy['ISO_code'].astype('category')

hfi_copy['countries'] = hfi_copy['countries'].astype('category')

hfi_copy['region'] = hfi_copy['region'].astype('category')

print(hfi_copy.info(verbose=True))

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 1458 entries, 0 to 1457

Data columns (total 126 columns):

# Column Dtype

--- ------ -----

0 year datetime64[ns]

1 ISO_code category

2 countries category

3 region category

4 pf_rol_procedural float64

5 pf_rol_civil float64

6 pf_rol_criminal float64

7 pf_rol float64

8 pf_ss_homicide float64

9 pf_ss_disappearances_disap float64

10 pf_ss_disappearances_violent float64

11 pf_ss_disappearances_organized float64

12 pf_ss_disappearances_fatalities float64

13 pf_ss_disappearances_injuries float64

14 pf_ss_disappearances float64

15 pf_ss_women_fgm float64

16 pf_ss_women_missing float64

17 pf_ss_women_inheritance_widows float64

18 pf_ss_women_inheritance_daughters float64

19 pf_ss_women_inheritance float64

20 pf_ss_women float64

21 pf_ss float64

22 pf_movement_domestic float64

23 pf_movement_foreign float64

24 pf_movement_women float64

25 pf_movement float64

26 pf_religion_estop_establish float64

27 pf_religion_estop_operate float64

28 pf_religion_estop float64

29 pf_religion_harassment float64

30 pf_religion_restrictions float64

31 pf_religion float64

32 pf_association_association float64

33 pf_association_assembly float64

34 pf_association_political_establish float64

35 pf_association_political_operate float64

36 pf_association_political float64

37 pf_association_prof_establish float64

38 pf_association_prof_operate float64

39 pf_association_prof float64

40 pf_association_sport_establish float64

41 pf_association_sport_operate float64

42 pf_association_sport float64

43 pf_association float64

44 pf_expression_killed float64

45 pf_expression_jailed float64

46 pf_expression_influence float64

47 pf_expression_control float64

48 pf_expression_cable float64

49 pf_expression_newspapers float64

50 pf_expression_internet float64

51 pf_expression float64

52 pf_identity_legal float64

53 pf_identity_parental_marriage float64

54 pf_identity_parental_divorce float64

55 pf_identity_parental float64

56 pf_identity_sex_male float64

57 pf_identity_sex_female float64

58 pf_identity_sex float64

59 pf_identity_divorce float64

60 pf_identity float64

61 pf_score float64

62 pf_rank float64

63 ef_government_consumption float64

64 ef_government_transfers float64

65 ef_government_enterprises float64

66 ef_government_tax_income float64

67 ef_government_tax_payroll float64

68 ef_government_tax float64

69 ef_government float64

70 ef_legal_judicial float64

71 ef_legal_courts float64

72 ef_legal_protection float64

73 ef_legal_military float64

74 ef_legal_integrity float64

75 ef_legal_enforcement float64

76 ef_legal_restrictions float64

77 ef_legal_police float64

78 ef_legal_crime float64

79 ef_legal_gender float64

80 ef_legal float64

81 ef_money_growth float64

82 ef_money_sd float64

83 ef_money_inflation float64

84 ef_money_currency float64

85 ef_money float64

86 ef_trade_tariffs_revenue float64

87 ef_trade_tariffs_mean float64

88 ef_trade_tariffs_sd float64

89 ef_trade_tariffs float64

90 ef_trade_regulatory_nontariff float64

91 ef_trade_regulatory_compliance float64

92 ef_trade_regulatory float64

93 ef_trade_black float64

94 ef_trade_movement_foreign float64

95 ef_trade_movement_capital float64

96 ef_trade_movement_visit float64

97 ef_trade_movement float64

98 ef_trade float64

99 ef_regulation_credit_ownership float64

100 ef_regulation_credit_private float64

101 ef_regulation_credit_interest float64

102 ef_regulation_credit float64

103 ef_regulation_labor_minwage float64

104 ef_regulation_labor_firing float64

105 ef_regulation_labor_bargain float64

106 ef_regulation_labor_hours float64

107 ef_regulation_labor_dismissal float64

108 ef_regulation_labor_conscription float64

109 ef_regulation_labor float64

110 ef_regulation_business_adm float64

111 ef_regulation_business_bureaucracy float64

112 ef_regulation_business_start float64

113 ef_regulation_business_bribes float64

114 ef_regulation_business_licensing float64

115 ef_regulation_business_compliance float64

116 ef_regulation_business float64

117 ef_regulation float64

118 ef_score float64

119 ef_rank float64

120 hf_score float64

121 hf_rank float64

122 hf_quartile float64

123 mean_hf_score float64

124 median_hf_score float64

125 mode_hf_score float64

dtypes: category(3), datetime64[ns](1), float64(122)

memory usage: 1.4 MB

None

Creating a boolean column from hf_score

Let’s say we want to know whether countries are “free” or not.
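The code that creates the column is not shown, and neither is the cutoff used. The sketch below shows one way to build `hf_score_above_threshold`; the value of `THRESHOLD` and the `toy` frame are purely hypothetical.

```python
import numpy as np
import pandas as pd

# Invented data; only the column names come from the dataset
toy = pd.DataFrame({
    "countries": ["A", "B", "C"],
    "hf_score": [8.9, 6.4, np.nan],
})

# Hypothetical cutoff: the threshold actually used is not stated
THRESHOLD = 8.0

# The comparison yields a boolean column; a missing score compares as False
toy["hf_score_above_threshold"] = toy["hf_score"] > THRESHOLD

print(toy)
```

Because missing scores become `False`, it may be worth handling them first if "unknown" should be kept distinct from "not free".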

Filtering + subsetting

Filter for True values

# Keep only the rows flagged True, then select the two columns of interest

filtered_hfi_copy = hfi_copy[hfi_copy['hf_score_above_threshold'] == True][['hf_score_above_threshold', 'countries']]

print(filtered_hfi_copy)

hf_score_above_threshold countries

5 True Australia

27 True Canada

41 True Denmark

63 True Hong Kong

70 True Ireland

... ... ...

1359 True Hong Kong

1403 True New Zealand

1407 True Norway

1436 True Switzerland

1450 True United Kingdom

[83 rows x 2 columns]

That’s a lot of data… 🤔

Filter for True values in the newest year

newest_year = hfi_copy['year'].max()

filtered_hfi_copy = hfi_copy[(hfi_copy['year'] == newest_year) & (hfi_copy['hf_score_above_threshold'] == True)]

result = filtered_hfi_copy[['hf_score_above_threshold', 'countries']]

print(result)

hf_score_above_threshold countries

5 True Australia

27 True Canada

41 True Denmark

63 True Hong Kong

70 True Ireland

106 True Netherlands

107 True New Zealand

140 True Switzerland

Filtering categories

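options = ['United States', 'India', 'Canada', 'China']

# Use hfi_copy, where countries is categorical (.cat fails on object columns); .copy() avoids a SettingWithCopyWarning

filtered_hfi = hfi_copy[hfi_copy['countries'].isin(options)].copy()

# Drop the category levels that no longer appear after filtering

filtered_hfi['countries'] = filtered_hfi['countries'].cat.remove_unused_categories()

unique_countries = filtered_hfi['countries'].unique()

print(unique_countries)

['Canada', 'China', 'India', 'United States']

Categories (4, object): ['Canada', 'China', 'India', 'United States']

Transformations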

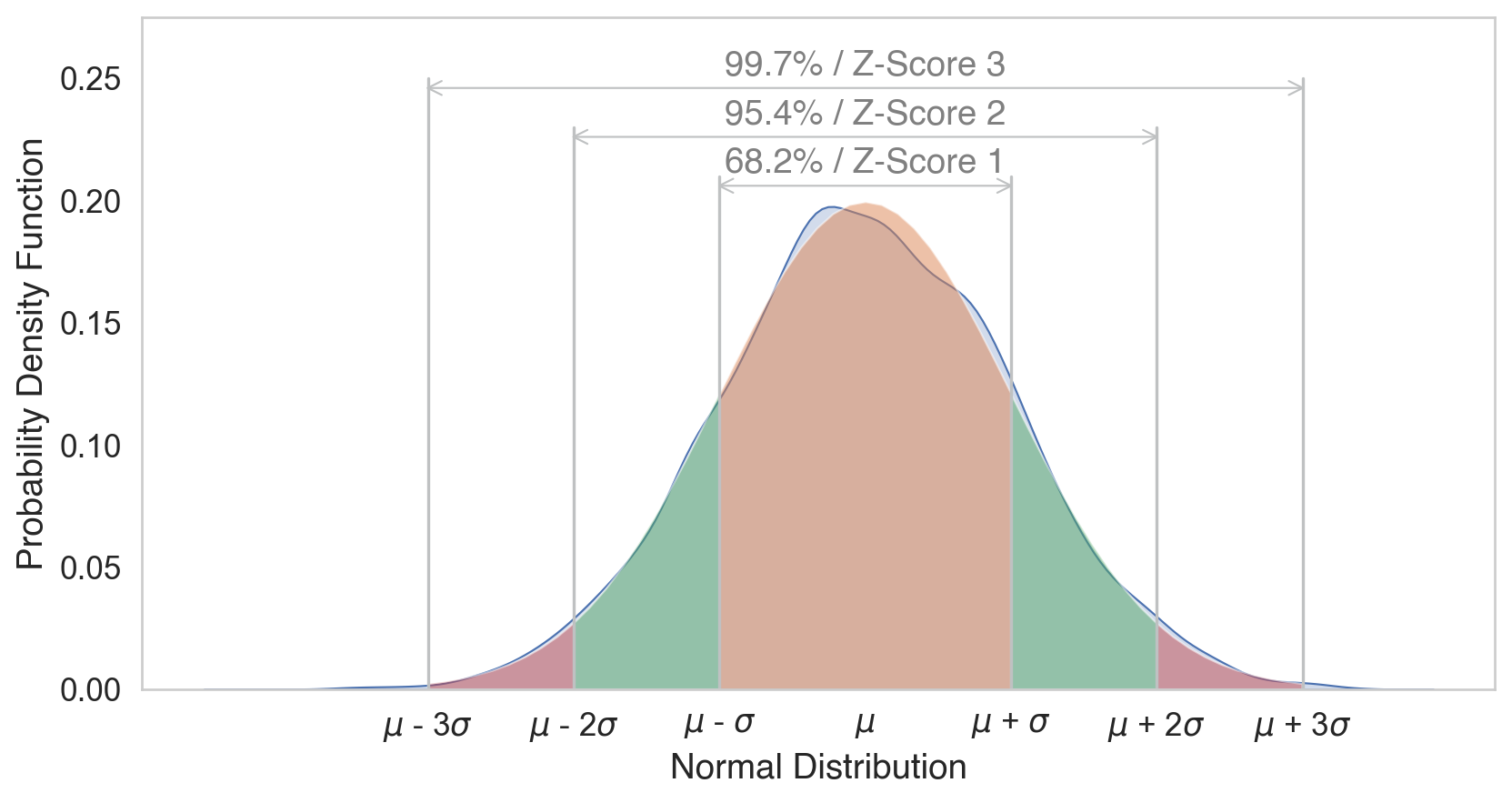

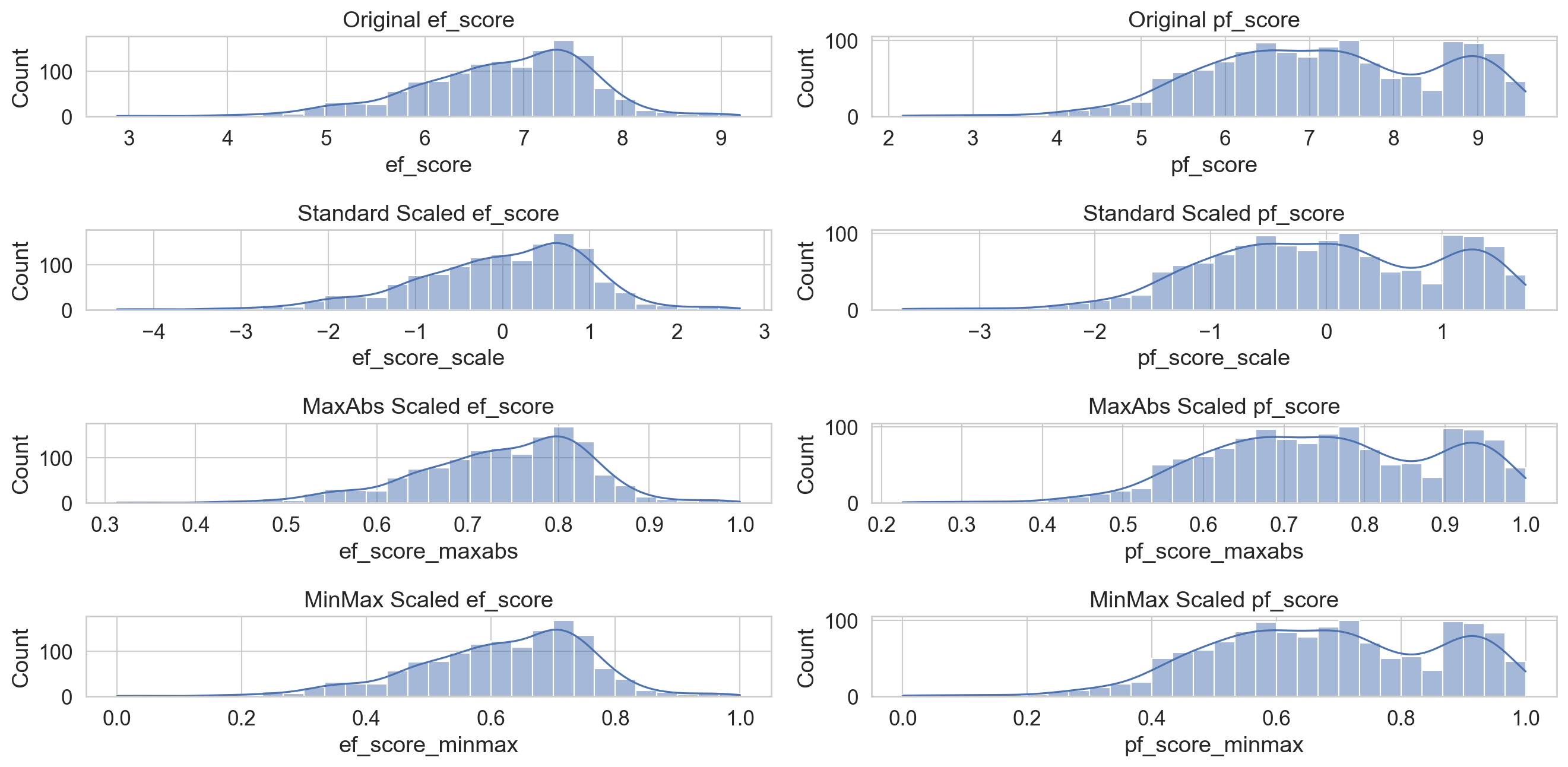

Normalizing

Mean: 5

Standard Deviation: 2

hfi_copy = hfi.copy()

scaler = StandardScaler()

hfi_copy[['ef_score_scale', 'pf_score_scale']] = scaler.fit_transform(hfi_copy[['ef_score', 'pf_score']])

hfi_copy[['ef_score_scale', 'pf_score_scale']].describe()

|  | ef_score_scale | pf_score_scale |
|---|---|---|
| count | 1.378000e+03 | 1.378000e+03 |
| mean | 4.524683e-16 | 2.062533e-17 |
| std | 1.000363e+00 | 1.000363e+00 |
| min | -4.421711e+00 | -3.663087e+00 |
| 25% | -6.063870e-01 | -7.303950e-01 |
| 50% | 1.295064e-01 | -8.926277e-03 |
| 75% | 7.068997e-01 | 9.081441e-01 |
| max | 2.722116e+00 | 1.722056e+00 |

min_max_scaler = MinMaxScaler()

hfi_copy[['ef_score_minmax', 'pf_score_minmax']] = min_max_scaler.fit_transform(hfi_copy[['ef_score', 'pf_score']])

hfi_copy[['ef_score_minmax', 'pf_score_minmax']].describe()

|  | ef_score_minmax | pf_score_minmax |
|---|---|---|
| count | 1378.000000 | 1378.000000 |
| mean | 0.618956 | 0.680221 |
| std | 0.140032 | 0.185764 |
| min | 0.000000 | 0.000000 |
| 25% | 0.534073 | 0.544589 |
| 50% | 0.637084 | 0.678563 |
| 75% | 0.717908 | 0.848860 |
| max | 1.000000 | 1.000000 |

max_abs_scaler = MaxAbsScaler()

hfi_copy[['ef_score_maxabs', 'pf_score_maxabs']] = max_abs_scaler.fit_transform(hfi_copy[['ef_score', 'pf_score']])

hfi_copy[['ef_score_maxabs', 'pf_score_maxabs']].describe()

|  | ef_score_maxabs | pf_score_maxabs |
|---|---|---|
| count | 1378.000000 | 1378.000000 |
| mean | 0.738369 | 0.752630 |
| std | 0.096148 | 0.143700 |
| min | 0.313384 | 0.226434 |
| 25% | 0.680087 | 0.647710 |
| 50% | 0.750816 | 0.751348 |
| 75% | 0.806311 | 0.883083 |
| max | 1.000000 | 1.000000 |
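To make the three scalers less of a black box, the sketch below reproduces their arithmetic by hand with NumPy on a made-up array. It mirrors the formulas only and is not the scikit-learn implementation.

```python
import numpy as np

# Invented values, chosen so the results are easy to verify
x = np.array([2.0, 4.0, 6.0, 8.0])

# Standardization (z-score): subtract the mean, divide by the population std (ddof = 0)
z = (x - x.mean()) / x.std()

# Min-max scaling: map the minimum to 0 and the maximum to 1
minmax = (x - x.min()) / (x.max() - x.min())

# Max-abs scaling: divide by the largest absolute value
maxabs = x / np.abs(x).max()

print(z.std(ddof=0))  # population formula: approximately 1
print(z.std(ddof=1))  # sample formula: slightly above 1
```

`describe()` reports the sample standard deviation (ddof = 1), which is why the `std` row of the StandardScaler output reads 1.000363 rather than exactly 1: sqrt(1378 / 1377) ≈ 1.000363.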

Visual comparison

Pros + cons (StandardScaler)

Pros:

Normalization of Variance: Centers data around zero with a standard deviation of one, which suits algorithms that expect centered features on comparable scales (e.g., linear regression, logistic regression, neural networks).

Preserves Relationships: It is a linear rescaling, so the ordering of data points and their relative differences are maintained.

Cons:

Sensitive to Outliers: Outliers shift the mean and inflate the standard deviation, distorting the scaled values.

Assumes Normality: Works best when the data are roughly Gaussian; it does not change the shape of a skewed distribution.

Pros + cons (MaxAbsScaler)

Pros:

Preserves Sparsity: Does not center data, so zeros stay zero and the sparsity pattern is preserved.

Preserves Sign: Divides by the absolute maximum only, so positive and negative values keep their sign.

Cons:

Sensitive to Outliers: The scale is set by the single largest absolute value, so one extreme value compresses all the others.

Scale Limitation: Scales to the range [-1, 1], which may not suit all algorithms.

Not Zero-Centered: May be a limitation for algorithms preferring zero-centered data.

Pros + cons (MinMaxScaler)

Pros:

Fixed Range: Scales data to a fixed range (usually [0, 1]), beneficial for algorithms sensitive to feature scales (e.g., neural networks, k-nearest neighbors).

Preserves Relationships: Maintains relationships between data points.

Cons:

Sensitive to Outliers: Outliers can skew scaled values.

Range Dependence: Scaling depends on the min and max values in the training data, which may not generalize well to new data.
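The range-dependence point can be seen in a few lines. In this sketch (made-up numbers, NumPy only), the minimum and maximum are learned from `train` and then applied to `new`, which contains values outside the training range:

```python
import numpy as np

# Invented training data and later-arriving data
train = np.array([2.0, 4.0, 6.0, 8.0])
new = np.array([1.0, 5.0, 10.0])

# Learn the scaling parameters from the training data only
lo, hi = train.min(), train.max()

def minmax_scale(values):
    """Apply the min-max mapping learned from `train`."""
    return (values - lo) / (hi - lo)

train_scaled = minmax_scale(train)
new_scaled = minmax_scale(new)

print(train_scaled)
print(new_scaled)
```

Values below the training minimum come out negative and values above the training maximum exceed 1, so the "fixed range" only holds for data resembling the training set.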

Normality

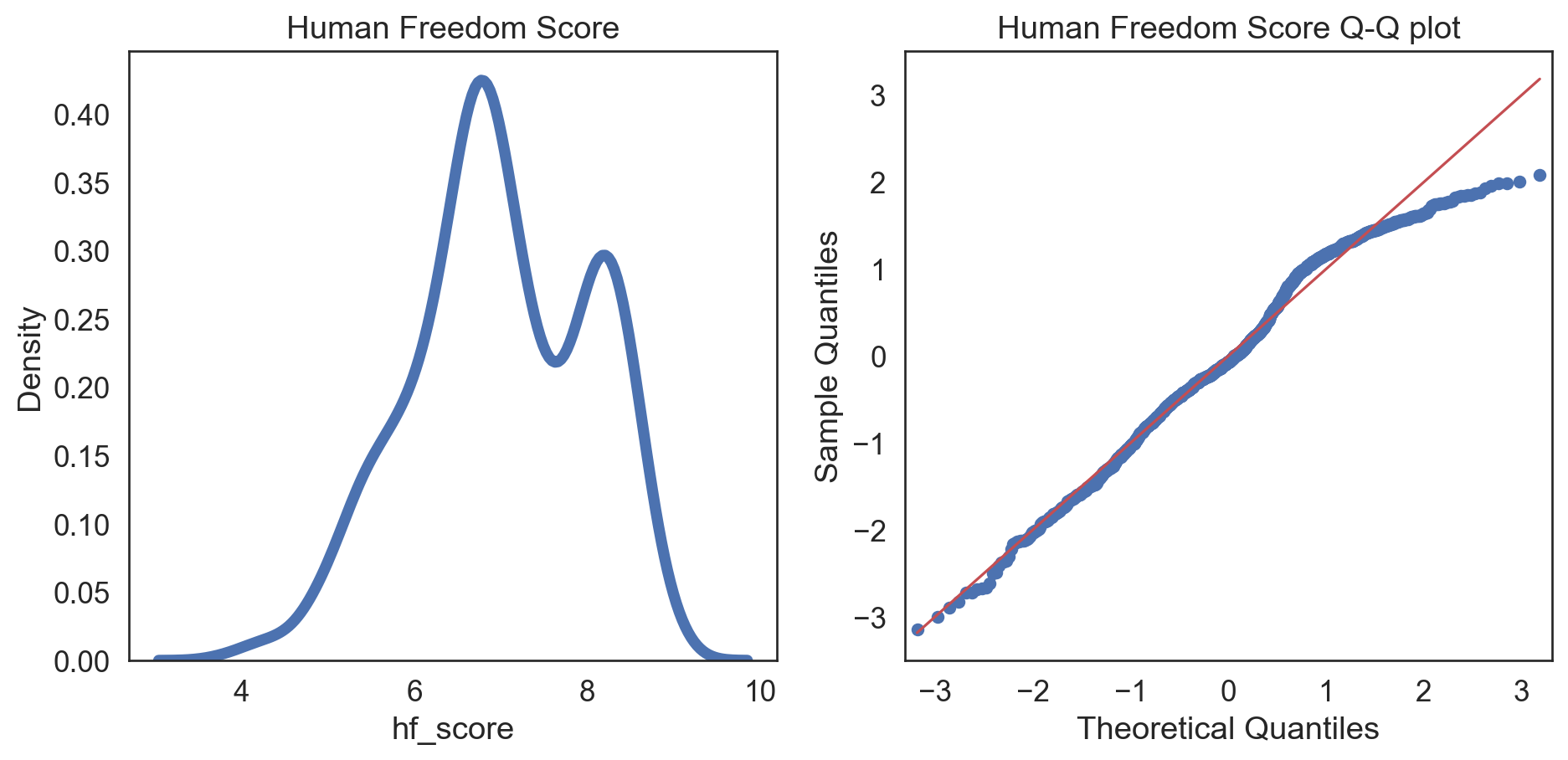

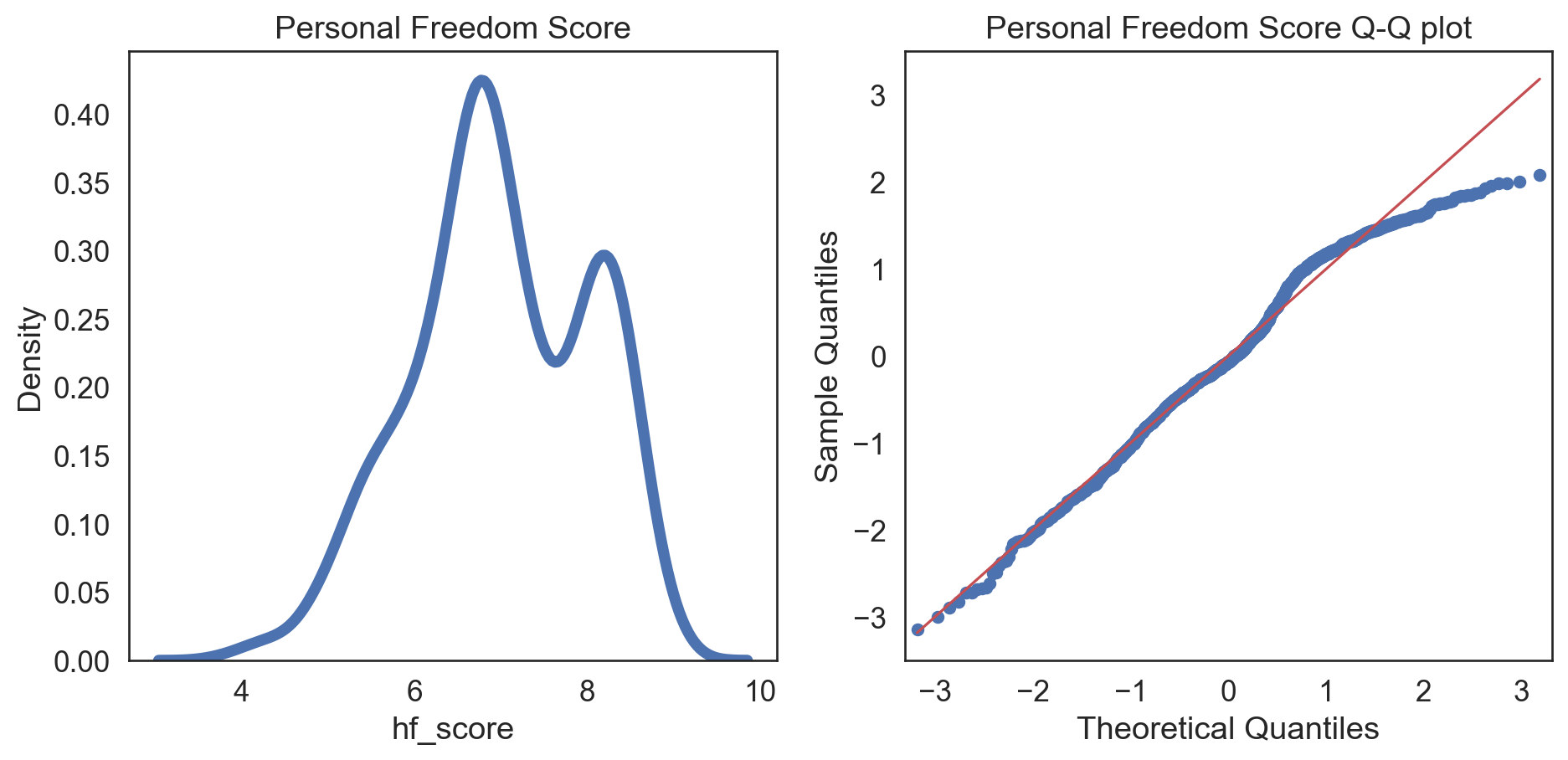

Normality test: Q-Q plot

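# Drop rows without an hf_score; .copy() lets us add columns later without a SettingWithCopyWarning

hfi_clean = hfi_copy.dropna(subset = ['hf_score']).copy()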

sns.set_style("white")

fig, (ax1, ax2) = plt.subplots(ncols = 2, nrows = 1)

sns.kdeplot(data = hfi_clean, x = "hf_score", linewidth = 5, ax = ax1)

ax1.set_title('Human Freedom Score')

sm.qqplot(hfi_clean['hf_score'], line = 's', ax = ax2, dist = stats.norm, fit = True)

ax2.set_title('Human Freedom Score Q-Q plot')

plt.tight_layout()

plt.show()

There were some issues in our plots:

Left Tail: Points deviate downwards from the line, indicating more extreme low values than a normal distribution (negative skewness).

Central Section: Points align closely with the line, suggesting the central data is similar to a normal distribution.

Right Tail: Points curve upwards, showing potential for extreme high values (positive skewness).
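To see what a Q-Q plot actually compares, the sketch below computes the point pairs by hand for a small made-up, left-skewed sample. The plotting positions (i - 0.5) / n are one common convention and an assumption here; plotting libraries may use a slightly different one.

```python
from statistics import NormalDist

import numpy as np

# Invented left-skewed sample: most values high, a few far below
sample = np.array([2.0, 5.0, 6.5, 7.0, 7.2, 7.5, 7.8, 8.0, 8.1, 8.3])

# Standardize and sort to get the sample quantiles
sample_q = np.sort((sample - sample.mean()) / sample.std(ddof=1))

# Theoretical standard-normal quantiles at plotting positions (i - 0.5) / n
n = len(sample)
probs = (np.arange(1, n + 1) - 0.5) / n
theory_q = np.array([NormalDist().inv_cdf(p) for p in probs])

# Each (theoretical, sample) pair is one point; a normal sample lies near y = x
for t, s in zip(theory_q, sample_q):
    print(f"{t:6.2f} {s:6.2f}")
```

In this toy sample the lowest point sits well below the reference line, which is the left-tail pattern described above for negative skew.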

Correcting skew

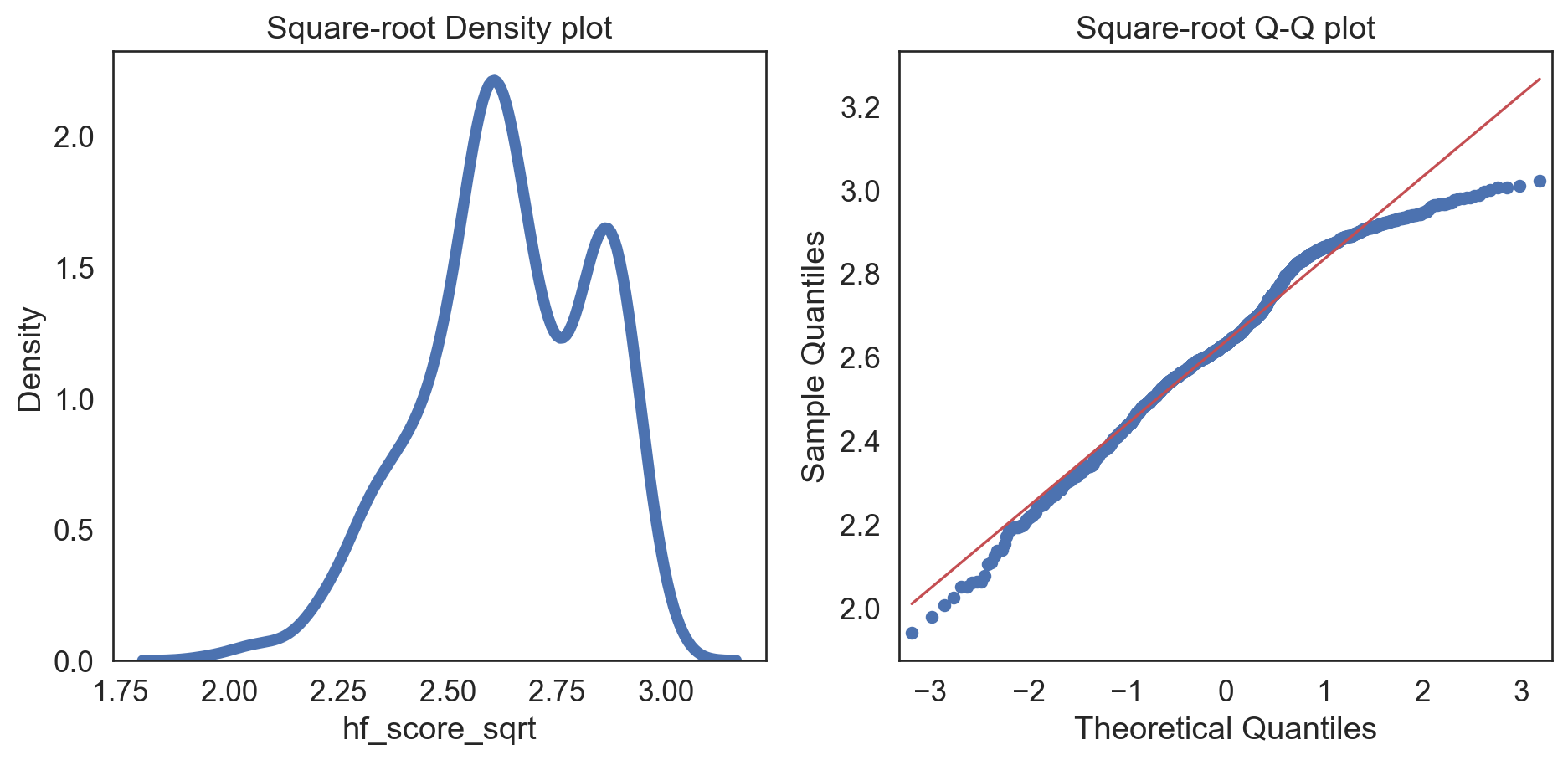

Square-root transformation: \(\sqrt{x}\). Used for moderate right skew (positive skew).

- Cannot handle negative values (but can handle zeros)

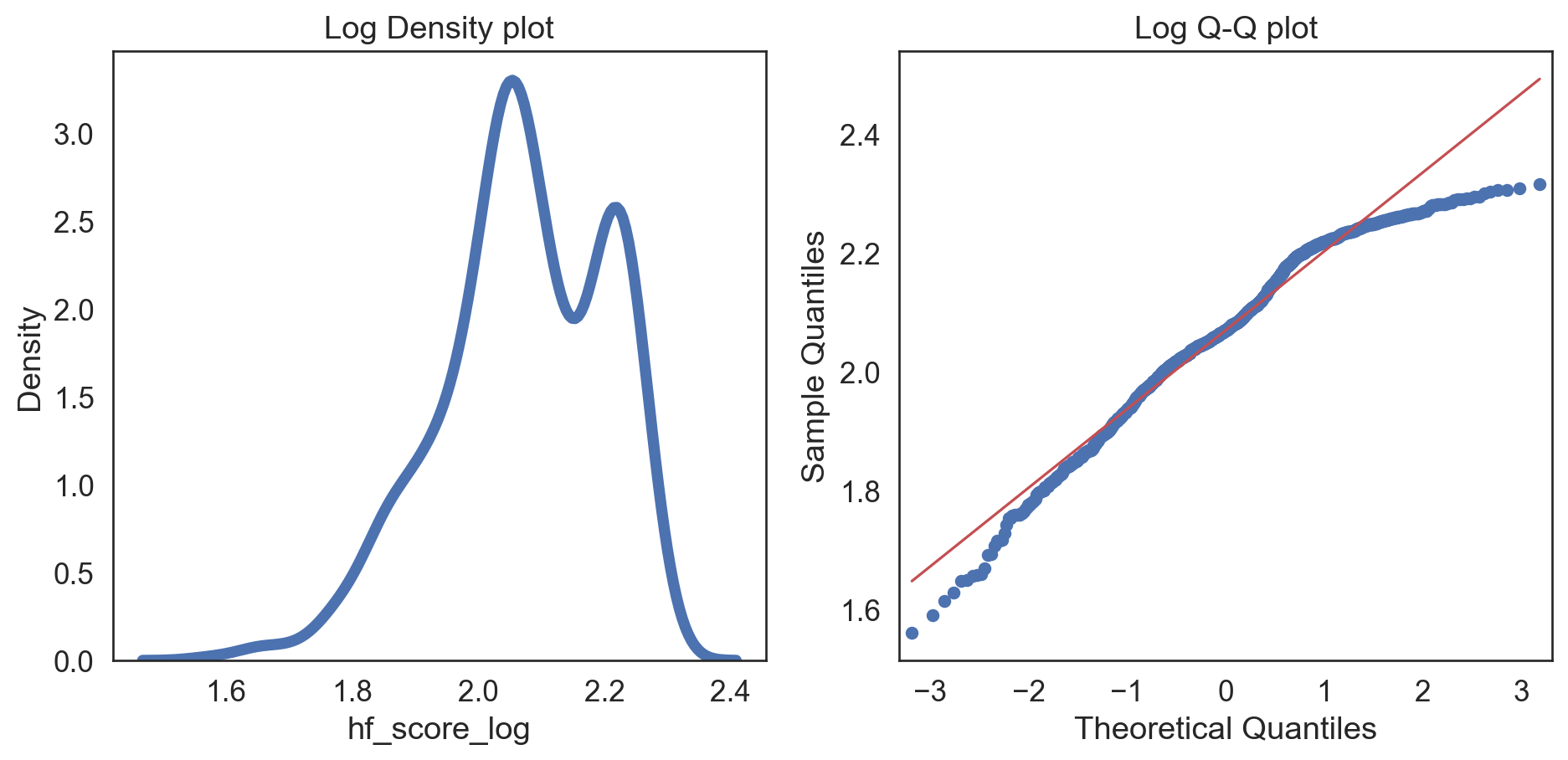

Log transformation: \(\log(x + 1)\). Used for substantial right skew (positive skew).

- Plain \(\log(x)\) cannot handle negative or zero values; the \(+1\) shift allows zeros, but values of \(-1\) or below still fail

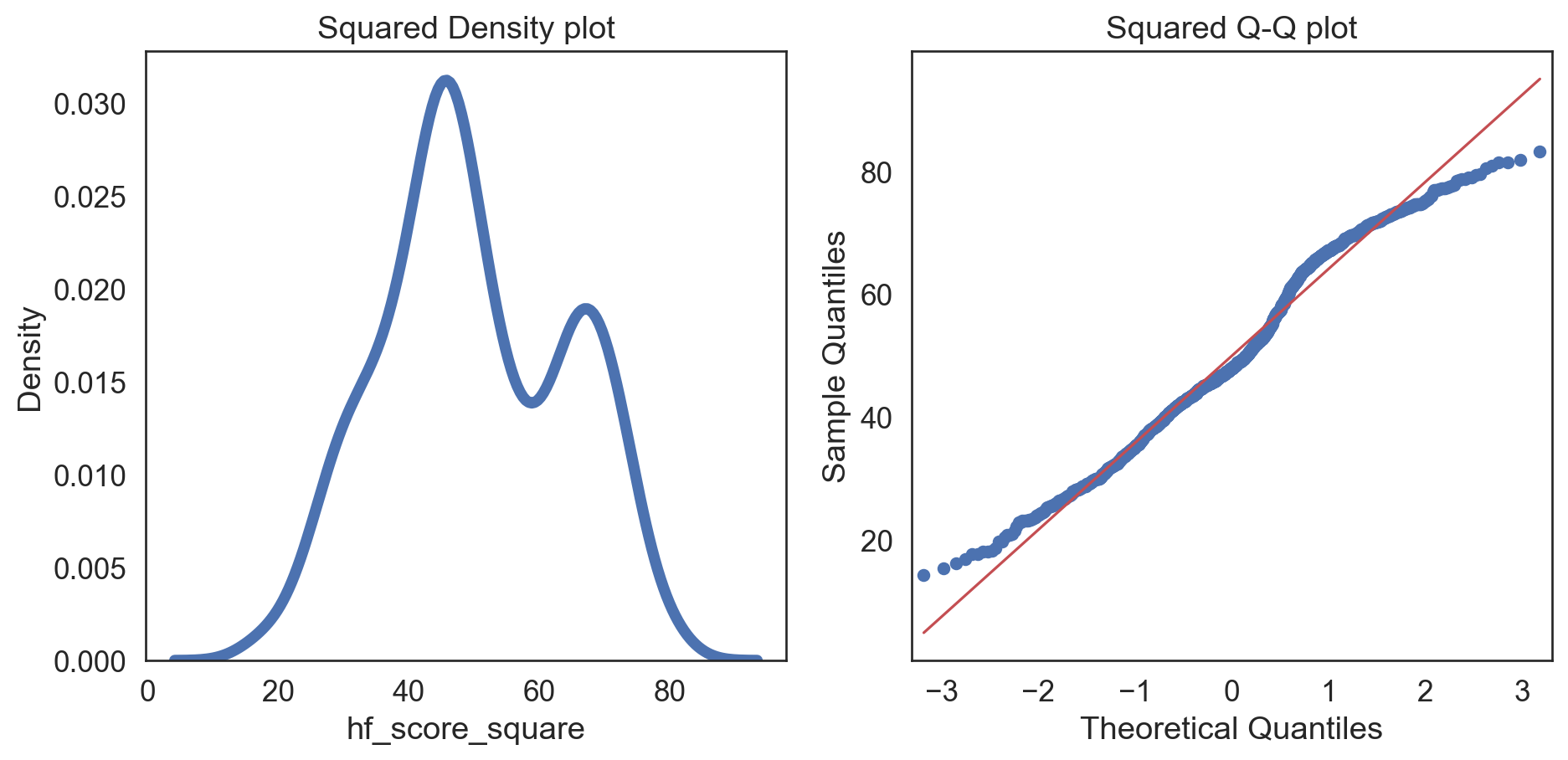

Squared transformation: \(x^2\). Used for moderate left skew (negative skew).

- Effective when lower values are densely packed together
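A quick way to compare the three transformations is to check how each one changes the sample skewness. The sketch below uses a made-up, strictly positive, left-skewed sample and pandas' `skew()`; the numbers are illustrative only and say nothing about `hf_score` itself.

```python
import numpy as np
import pandas as pd

# Invented left-skewed, strictly positive sample
x = pd.Series([2.0, 5.0, 6.5, 7.0, 7.2, 7.5, 7.8, 8.0, 8.1, 8.3])

transformed = pd.DataFrame({
    "original": x,
    "sqrt": np.sqrt(x),
    "log1p": np.log1p(x),  # log(x + 1)
    "square": x ** 2,
})

# Sample skewness per column: negative means left skew, closer to 0 means more symmetric
skewness = transformed.skew()

print(skewness.round(2))
```

On this toy sample, squaring pulls the skewness toward zero, while the square root and log push it further negative, in line with the guidance that those two target right skew.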

Comparing transformations

Moderate negative skew, no zeros or negative values


hfi_clean['hf_score_sqrt'] = np.sqrt(hfi_clean['hf_score'])

col = hfi_clean['hf_score_sqrt']

fig, (ax1, ax2) = plt.subplots(ncols = 2, nrows = 1)

sns.kdeplot(col, linewidth = 5, ax = ax1)

ax1.set_title('Square-root Density plot')

sm.qqplot(col, line = 's', ax = ax2)

ax2.set_title('Square-root Q-Q plot')

plt.tight_layout()

plt.show()


hfi_clean['hf_score_log'] = np.log(hfi_clean['hf_score'] + 1)

col = hfi_clean['hf_score_log']

fig, (ax1, ax2) = plt.subplots(ncols = 2, nrows = 1)

sns.kdeplot(col, linewidth = 5, ax = ax1)

ax1.set_title('Log Density plot')

sm.qqplot(col, line = 's', ax = ax2)

ax2.set_title('Log Q-Q plot')

plt.tight_layout()

plt.show()


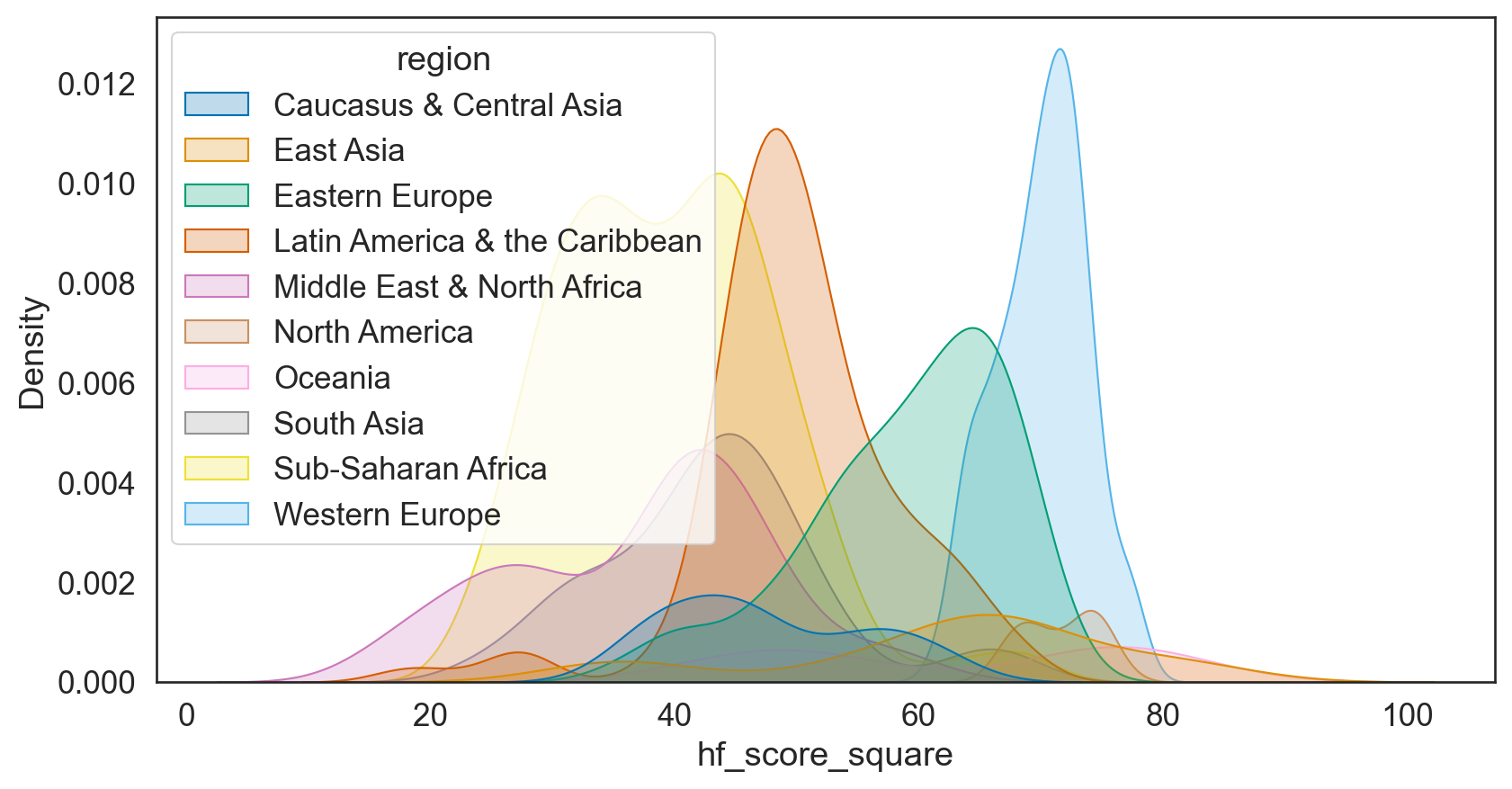

hfi_clean['hf_score_square'] = hfi_clean['hf_score'] ** 2

col = hfi_clean['hf_score_square']

fig, (ax1, ax2) = plt.subplots(ncols = 2, nrows = 1)

sns.kdeplot(col, linewidth = 5, ax = ax1)

ax1.set_title('Squared Density plot')

sm.qqplot(col, line = 's', ax = ax2)

ax2.set_title('Squared Q-Q plot')

plt.tight_layout()

plt.show()

What did we learn?

The negative skew ruled out every transformation except the squared one

… so the squared transformation was the best of the three

The data is bimodal, so no transformation is perfect

Answering our question

What trends are there within human freedom indices in different regions?

Probably…